For more than a century, human drivers have relied on their eyes, ears, and reflexes to navigate roads. Today, with the arrival of fully driverless cars, those biological sensors are being replaced by an array of advanced technologies that allow machines to perceive their surroundings in extraordinary detail. From laser-based lidar scanners to high-definition cameras, from radar units that see through fog to microphones that hear sirens, these sensors work together to give an autonomous vehicle a comprehensive and redundant view of the world.

The year 2025 marks a turning point: several companies, including Waymo, Zoox, and Baidu Apollo, are moving beyond pilot projects into scaled deployments of Level 4 autonomous vehicles. These robotaxis and shuttle pods are not experimental curiosities anymore; they are increasingly expected to operate in complex city environments with minimal human intervention. But what makes them trustworthy enough to carry passengers safely? The answer lies in the fusion of multiple sensors.

Why Are So Many Sensors Needed in Driverless Cars?

If humans can drive with just two eyes and two ears, why do driverless cars require up to a dozen different sensor types mounted all around the vehicle? The reason is reliability. Each sensor has unique strengths, but also weaknesses. Cameras, for instance, excel at recognizing road signs but can be blinded by glare. Radar works well in rain but has limited resolution. Lidar provides precise 3D mapping, but can be expensive and attenuated by heavy snow.

Instead of betting on one technology, engineers design multimodal perception systems where different sensors complement each other. This redundancy ensures that if one fails due to weather, lighting, or occlusion, another can still provide critical information. In fact, safety regulators expect driverless cars to degrade gracefully—never going “blind” even if one channel of perception is lost.
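
One simple way to picture this redundancy numerically is inverse-variance fusion, where each available sensor's estimate is weighted by how much it can be trusted and a missing sensor is simply skipped. The sketch below is only an illustration of that idea: the function name `fuse_ranges`, the sensor labels, and the variance values are hypothetical, and real perception stacks use far richer filters than this.

```python
from typing import Dict, Optional, Tuple

Reading = Tuple[float, float]  # (range in meters, variance in square meters)


def fuse_ranges(readings: Dict[str, Optional[Reading]]) -> Optional[Reading]:
    """Fuse per-sensor range estimates with inverse-variance weights.

    A value of None means the sensor currently has no usable reading
    (for example glare, heavy snow, or occlusion). Such sensors are
    skipped, so the estimate degrades instead of failing outright.
    """
    available = [r for r in readings.values() if r is not None]
    if not available:
        return None  # every channel is lost; the caller must handle this case

    weights = [1.0 / variance for _, variance in available]
    total_weight = sum(weights)
    fused_range = sum(w * rng for w, (rng, _) in zip(weights, available)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_range, fused_variance


# Hypothetical readings: the camera is blinded, lidar and radar still report.
print(fuse_ranges({"lidar": (10.0, 1.0), "radar": (12.0, 1.0), "camera": None}))
```

With one channel missing, the fused estimate still exists but carries a larger variance than it would with all sensors contributing, which is one way to express "degrading gracefully" in numbers.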

Lidar: The Backbone of 3D Perception

Among all the sensors, lidar (Light Detection and Ranging) is often considered the backbone of autonomy. By emitting laser pulses and measuring their reflections, lidar builds a highly accurate 3D point cloud of the surroundings. Unlike cameras, lidar does not rely on ambient light, so it performs consistently both day and night.
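
The pulse-and-reflection principle comes down to a short calculation: the distance is half the round-trip time multiplied by the speed of light. The following is a minimal sketch of that arithmetic for a pulsed (time-of-flight) lidar; the function name `tof_range_m` is made up for this example and does not come from any vendor's software.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_range_m(round_trip_s: float) -> float:
    """Distance to a target given a laser pulse's round-trip time in seconds.

    The pulse travels to the target and back, so the one-way
    distance is half of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# A reflection that arrives one microsecond after emission
# corresponds to a target roughly 150 meters away.
print(round(tof_range_m(1e-6), 3))
```

Repeating this measurement across many beam directions is what yields the 3D point cloud.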

Waymo’s latest sixth-generation autonomous vehicle platform, for example, uses multiple lidars to cover long-, mid-, and short-range distances. This enables the system to see vehicles hundreds of meters away, as well as pedestrians close to the curb. Companies like Zoox integrate lidars into the corners of their vehicles for full 360° visibility.

Lidar Sensor

The industry trend in 2025 is moving towards solid-state and FMCW (Frequency-Modulated Continuous Wave) lidars, which promise lower costs, better durability, and even the ability to measure object velocity directly. While once costing tens of thousands of dollars, lidars are now being produced at scale for just a fraction of that, making them practical for fleet deployment.
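
The direct velocity measurement mentioned above rests on the Doppler effect: light reflected from a moving target comes back slightly shifted in frequency, and the shift is proportional to the target's speed along the beam. A rough sketch of the relationship follows. The function name and the 1550 nm wavelength are assumptions chosen for illustration, not the specification of any particular sensor.

```python
def doppler_radial_velocity_mps(doppler_shift_hz: float, wavelength_m: float) -> float:
    """Radial (along-beam) velocity implied by a measured Doppler shift.

    The factor of two appears because the light makes a round trip.
    A positive shift is taken to mean the target is approaching.
    """
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    return doppler_shift_hz * wavelength_m / 2.0


# Assumed laser wavelength of 1550 nm (illustrative value).
WAVELENGTH_M = 1550e-9

# A shift of about 12.9 MHz corresponds to a closing speed near 10 m/s.
print(doppler_radial_velocity_mps(12.9e6, WAVELENGTH_M))
```

Only the component of motion toward or away from the sensor is measured this way; sideways motion still has to be inferred from successive frames.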

Radar: The All-Weather Workhorse

While lidar excels in geometry, radar (Radio Detection and Ranging) provides unmatched robustness in adverse weather. Radar waves penetrate rain, fog, and snow, giving vehicles a reliable way to detect objects when cameras or lidars struggle.

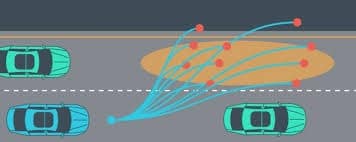

Traditional automotive radar has long been used in adaptive cruise control and collision avoidance. But for full autonomy, next-generation 4D imaging radar has become essential. These radars not only measure range and velocity but also add elevation and high-resolution angular data, producing something close to a low-resolution 3D image.
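
To make the "4D" idea concrete, each detection can be thought of as four numbers: range, radial velocity, azimuth, and elevation. The sketch below shows how such a detection could be turned into a 3D point. The `RadarDetection` class, its field names, and the axis convention (x forward, y left, z up) are assumptions made for this example rather than any radar vendor's format.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RadarDetection:
    range_m: float              # distance to the reflector
    radial_velocity_mps: float  # speed along the line of sight
    azimuth_rad: float          # left/right angle, 0 = straight ahead
    elevation_rad: float        # up/down angle, 0 = level with the sensor

    def to_cartesian(self) -> Tuple[float, float, float]:
        """Convert the spherical measurement to (x, y, z) in the sensor frame."""
        horizontal = self.range_m * math.cos(self.elevation_rad)
        x = horizontal * math.cos(self.azimuth_rad)      # forward
        y = horizontal * math.sin(self.azimuth_rad)      # left
        z = self.range_m * math.sin(self.elevation_rad)  # up
        return x, y, z


# A reflector 10 m away, 30 degrees above the horizon, dead ahead.
detection = RadarDetection(10.0, -3.0, 0.0, math.pi / 6)
print(detection.to_cartesian())
```

The elevation angle is what separates, say, an overhead sign from a stopped vehicle at the same range and azimuth, which is why it matters for full autonomy.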

Radar Sensor

In a driverless car, radar plays a crucial role in tracking moving objects (vehicles, cyclists, pedestrians) and in predicting their velocity and trajectories. When fused with lidar and camera data, radar helps the vehicle stay aware of fast-approaching objects, even in a storm.

Cameras: The Semantic Eyes of the Vehicle

If lidar and radar provide structure and motion, cameras provide semantics. Cameras recognize the meaning of road scenes: traffic lights, stop signs, lane markings, construction cones, and even subtle cues such as a pedestrian’s body language.

Modern driverless cars are fitted with high-dynamic-range (HDR) cameras that can handle extreme lighting conditions, as well as wide-angle and telephoto lenses to capture details across distances. Waymo’s robotaxis, for instance, feature over a dozen cameras strategically placed around the vehicle to achieve complete coverage.

HDR Camera Sensors in Driverless Cars

In addition, some fleets integrate polarized cameras that reduce glare from wet roads, and near-infrared (NIR) cameras that extend visibility in low-light conditions. Without cameras, a driverless car would struggle to interpret the symbolic rules of the road.

Thermal and Infrared Cameras: Seeing the Unseen

A growing addition to the sensor suite in 2025 is thermal infrared imaging. Unlike visible cameras, thermal sensors detect heat signatures. This makes them particularly effective for spotting pedestrians, animals, or cyclists in the dark or through glare.

Thermal and Infrared Cameras

Studies have shown that combining thermal images with RGB cameras dramatically improves detection accuracy at night. Automakers are increasingly considering thermal cameras as a standard feature in urban robotaxis, especially in cities with high nighttime pedestrian activity.

Ultrasonic Sensors: Close-Range Guardians

While lidar, radar, and cameras handle long- and mid-range perception, ultrasonic sensors take care of the near field. Operating at very short distances, they detect curbs, small obstacles, or other vehicles during tight maneuvers like parking or docking.

Ultrasonic Sensors in Driverless Cars

Although some consumer carmakers have started phasing out ultrasonics in favor of vision-only approaches, they remain valuable in fully driverless cars where passenger safety around vehicle doors and tight spaces is paramount.

Listening to the Road: External Microphones

Driverless cars don’t just see; they also listen. External microphone arrays, sometimes called “EARS,” are designed to detect emergency vehicle sirens and horns long before the vehicles themselves come into view. Waymo, for instance, integrates microphones on its vehicles to determine the direction of approaching ambulances or fire trucks, allowing the car to yield proactively.
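
One common textbook way to estimate the direction of a sound is to compare when it arrives at two microphones a known distance apart. The sketch below illustrates that time-difference-of-arrival idea under a far-field assumption. It is not a description of how Waymo or any other company implements siren detection, and the function name, microphone spacing, and speed-of-sound value are assumptions for the example.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate value in air at about 20 °C


def bearing_from_tdoa_rad(delay_s: float, mic_spacing_m: float) -> float:
    """Angle of arrival from the arrival-time difference between two microphones.

    Returns the angle measured from the direction perpendicular to the
    line joining the microphones. Zero delay means the source is
    directly broadside to the pair.
    """
    if mic_spacing_m <= 0:
        raise ValueError("microphone spacing must be positive")
    ratio = SPEED_OF_SOUND_M_S * delay_s / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # guard against measurement noise
    return math.asin(ratio)


# Hypothetical 0.5 m spacing and a 0.5 ms delay between the two channels.
angle = bearing_from_tdoa_rad(0.0005, 0.5)
print(round(math.degrees(angle), 1))
```

A single pair cannot tell front from back, which is one reason arrays with several microphones around the vehicle are useful.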

External Microphones in Driverless Cars

This auditory capability ensures that driverless vehicles behave as courteous road users in unpredictable urban environments.

GNSS, RTK, IMU, and Wheel Encoders: Knowing Where You Are

Perception is useless without precise localization. To know exactly where they are on a map, driverless cars rely on Global Navigation Satellite Systems (GNSS) such as GPS. However, standard GPS alone is insufficient in urban areas, where tall buildings block and reflect satellite signals.

To address this, fleets use RTK (Real-Time Kinematic) corrections, which can improve positioning to within a few centimeters. This is further combined with Inertial Measurement Units (IMUs), which contain gyroscopes and accelerometers that track motion, and with wheel encoders that measure distance traveled. By fusing these inputs, an autonomous car can dead-reckon its path even when satellite signals are temporarily lost, such as in tunnels or dense city centers.
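
Dead reckoning itself can be sketched in a few lines: take the distance reported by the wheel encoders and the turn rate reported by the gyroscope, and advance the last known pose step by step. The version below is a simplified planar model with hypothetical names. It ignores wheel slip, sensor bias, and the filtering a production system would add.

```python
import math
from typing import Tuple

Pose = Tuple[float, float, float]  # (x in meters, y in meters, heading in radians)


def dead_reckon(pose: Pose, distance_m: float, yaw_rate_rad_s: float, dt_s: float) -> Pose:
    """Advance a 2D pose by one time step without any satellite fix.

    distance_m comes from the wheel encoders, yaw_rate_rad_s from the
    IMU gyroscope. The heading at the middle of the step is used for
    the position update, which reduces error while turning.
    """
    x, y, heading = pose
    mid_heading = heading + 0.5 * yaw_rate_rad_s * dt_s
    new_x = x + distance_m * math.cos(mid_heading)
    new_y = y + distance_m * math.sin(mid_heading)
    new_heading = heading + yaw_rate_rad_s * dt_s
    return new_x, new_y, new_heading


# Drive through a "tunnel": ten steps of 1 m each while turning gently left.
pose = (0.0, 0.0, 0.0)
for _ in range(10):
    pose = dead_reckon(pose, distance_m=1.0, yaw_rate_rad_s=0.1, dt_s=0.1)
print(pose)
```

Because each step builds on the previous one, small errors accumulate, which is why the estimate needs to be corrected again once satellite signals or map matches return.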

HD Maps: The Invisible Sensor

One often overlooked “sensor” is the high-definition (HD) map. Unlike consumer navigation maps, HD Maps are centimeter-level accurate and contain rich information about road geometry, lane-level markings, traffic signal locations, and even curb heights.

HD Maps

These maps act as a prior knowledge layer: the car doesn’t have to rediscover the road from scratch each time, but instead aligns its live sensor data against the map. Companies like Mobileye use millions of consumer vehicles to crowdsource updates, keeping HD maps fresh and accurate.
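
Aligning live sensor data against a map can be illustrated with a small 2D example: given a few landmarks seen by the car and the same landmarks stored in the map, find the rotation and shift that best lines them up. The sketch below uses the standard closed-form least-squares solution for matched 2D points. It assumes the landmark pairs are already matched, and the function name `align_2d` is hypothetical. Real systems work in 3D with far more points and must also handle mismatches.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def align_2d(live: List[Point], mapped: List[Point]) -> Tuple[float, float, float]:
    """Least-squares rigid alignment of matched 2D landmarks.

    Returns (theta, tx, ty) such that rotating a live point by theta
    and then shifting it by (tx, ty) places it on its map counterpart.
    """
    n = len(live)
    if n < 2 or n != len(mapped):
        raise ValueError("need at least two matched landmark pairs")

    # Centroids of both point sets.
    lcx = sum(p[0] for p in live) / n
    lcy = sum(p[1] for p in live) / n
    mcx = sum(q[0] for q in mapped) / n
    mcy = sum(q[1] for q in mapped) / n

    # Accumulate dot and cross products of the centered pairs.
    s_dot = 0.0
    s_cross = 0.0
    for (px, py), (qx, qy) in zip(live, mapped):
        ax, ay = px - lcx, py - lcy
        bx, by = qx - mcx, qy - mcy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    tx = mcx - (c * lcx - s * lcy)
    ty = mcy - (s * lcx + c * lcy)
    return theta, tx, ty


# Hypothetical landmarks in the car's frame and their stored map positions.
live_points = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
map_points = [(2.0, -1.0), (2.0, 0.0), (0.0, -1.0)]  # rotated 90 degrees, then shifted
print(align_2d(live_points, map_points))
```

The recovered rotation and shift are, in effect, the car's pose relative to the map.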

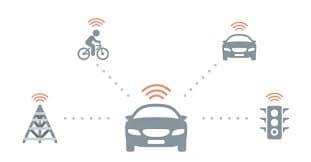

V2X Communication: Talking to the World

Finally, there is Vehicle-to-Everything (V2X) communication, which allows cars to exchange information with infrastructure, pedestrians, and other vehicles. Imagine a driverless car approaching an intersection where an ambulance is coming from around the corner. V2X can warn the vehicle before any sensor can physically detect the siren or flashing lights.

V2X Communication

In 2024, regulatory bodies cleared spectrum for Cellular V2X (C-V2X), paving the way for broader deployment in 2025. As more cities adopt connected infrastructure, V2X will become a crucial complement to onboard sensors, enabling safer cooperative driving.

Real-World Fleets: Who Uses What

Different companies balance their sensor choices differently.

- Waymo relies on a rich mix: 13 cameras, 4 lidars, 6 radars, and external microphones.

- Zoox, Amazon’s robotaxi division, places lidar and radar units at the corners of its bidirectional vehicle for overlapping coverage.

- Cruise has also deployed multiple lidar and radar units, though it has recently scaled back operations to refine safety.

- Across the board, one pattern holds: no major robotaxi fleet in 2025 relies solely on one type of sensor.

Manufacturing and Calibration: A Hidden Challenge

It’s not just about having the right sensors—it’s about calibrating them. Robotaxi factories now feature specialized tunnels where lidars are aligned, cameras are tested against visual targets, radars are checked for accuracy, and microphones are exposed to simulated sirens. Zoox’s California facility even includes artificial rain bays to test sensors under weather conditions. Without rigorous calibration, even the most advanced sensor can produce errors that compromise safety.

Costs and Trade-offs: Sensor-Rich vs Vision-Only

One of the biggest debates in the industry is whether fully driverless cars need all these sensors or whether cameras alone could suffice. Companies like Tesla champion vision-only approaches, claiming that neural networks can learn to interpret the world with cameras alone. Others, like Waymo and Zoox, argue that redundancy is essential for safety.

Currently, a sensor-rich suite for a robotaxi may cost in the low tens of thousands of dollars per vehicle. Vision-only approaches are cheaper, but they have yet to prove the same level of reliability in dense, unpredictable city environments.

The Road Ahead: Emerging Trends

Looking toward the future, several sensor trends are shaping the next generation of driverless cars:

- FMCW lidar for better velocity measurement and interference immunity.

- 4D imaging radar is becoming mainstream, not just for highways but for full urban coverage.

- Thermal cameras are gaining wider adoption in cities with heavy night traffic.

- Dynamic HD maps are continuously refreshed using crowdsourced data.

- Cooperative perception via V2X, where vehicles and infrastructure share sensor data to extend visibility.

- Quantum sensors, a more speculative and longer-term prospect that proponents expect could eventually reshape sensing for autonomous vehicles.

Conclusion: Diversity and Redundancy Win the Race

Fully driverless cars succeed not because of one magic sensor, but because many sensors cooperate, cross-check, and cover each other’s blind spots, and because maps, radios, and localization glue the whole system into a coherent, predictive brain. The 2025 state of the art is sensor-rich and fusion-heavy, with growing help from 4D radar, thermal imaging, fresh HD maps, and V2X.