The Jetson family is part of NVIDIA’s embedded systems lineup, designed to provide the hardware and software needed to develop AI-powered systems and devices. It includes several boards, such as the Jetson TX2 Series, Jetson Xavier NX, Jetson Nano, AGX Xavier Series, Xavier NX Developer Kit, and AGX Xavier Industrial. NVIDIA introduced the Jetson Nano in 2019 as a low-cost alternative to other Jetson boards. Thus, this board is specially targeted to provide … [Read more...]

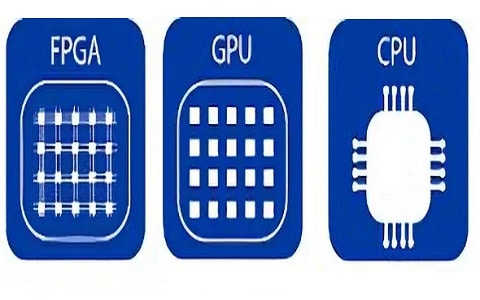

FPGA vs GPU vs TPU: Best Hardware for AI Applications

Artificial Intelligence (AI) applications require massive computational power for deep learning model training and inference, both of which are dominated by operations such as matrix multiplication. Over the last decade, three major hardware architectures have become central to AI workloads: Field-Programmable Gate Arrays (FPGAs), Graphics Processing Units (GPUs), and Tensor Processing Units (TPUs). Each of these platforms offers distinct advantages and trade-offs in terms of speed, flexibility, … [Read more...]